Add S3 capabilities to Azure Blob Storage using Minio

First of all, why can't we use the Azure API?

If you already use AWS S3 as object storage and want to migrate your applications to Azure, you will want to reduce the risk of that migration. One way to do that is to move your object storage without changing a line of code in your application. This is the solution we evaluated and now use for some of our services.

If you have problems uploading large files to Azure Blob Storage, such as a MySQL dump, the Azure API can limit you. For example, the Put Blob API only supports blobs up to 256 MB. You can upload multiple blocks and assemble them into a single blob, but that is more complicated than a simple multi-part upload with S3.
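To make the size argument concrete, here is a minimal sketch of how a multi-part upload slices a large file into parts. `plan_parts` is a hypothetical helper written for this post, not part of any SDK, and the 1 GB dump and 100 MB part size are arbitrary values chosen for illustration.

```python
from typing import List, Tuple

MB = 1024 * 1024


def plan_parts(total_size: int, part_size: int) -> List[Tuple[int, int]]:
    """Return (offset, length) pairs that together cover total_size bytes."""
    if part_size <= 0:
        raise ValueError("part_size must be positive")
    parts = []
    offset = 0
    while offset < total_size:
        # The last part is usually shorter than the others.
        length = min(part_size, total_size - offset)
        parts.append((offset, length))
        offset += length
    return parts


# Hypothetical 1 GB MySQL dump cut into 100 MB parts.
parts = plan_parts(1024 * MB, 100 * MB)
print(len(parts), "parts, last one is", parts[-1][1] // MB, "MB")
```

With S3, a client such as s3cmd does this slicing and the final assembly for you, which is why a single upload command is enough even for a large dump.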

What is an Object Storage

From Wikipedia:

Object storage is a computer data storage architecture that manages data as objects, as opposed to other storage architectures like file systems which manage data as a file hierarchy and block storage which manages data as blocks within sectors and tracks.

Each object typically includes the data itself, a variable amount of metadata, and a globally unique identifier. Object storage can be implemented at multiple levels, including the device level (object storage device), the system level, and the interface level.

In each case, object storage seeks to enable capabilities not addressed by other storage architectures, like interfaces that can be directly programmable by the application, a namespace that can span multiple instances of physical hardware, and data management functions like data replication and data distribution at object-level granularity.

Object-storage systems allow retention of massive amounts of unstructured data.

In my case, I mostly use object storage for storing attachments, backups and other unstructured data.

What is Minio

Minio is an open source object storage server with an Amazon S3 compatible API.

You can use Minio to build your own distributed object storage, or to add an S3 compatible API to a storage backend like Azure Blob Storage, Google Cloud Storage or a NAS. In this blog post, we will use Azure Blob Storage with Minio.

Azure limitations

- You can't set an ACL on an object, only on the container (bucket) or on the account

- A key can't grant access to several storage accounts, and you can't create several keys with different permissions for one storage account

- It doesn't have as many tools as AWS S3 has

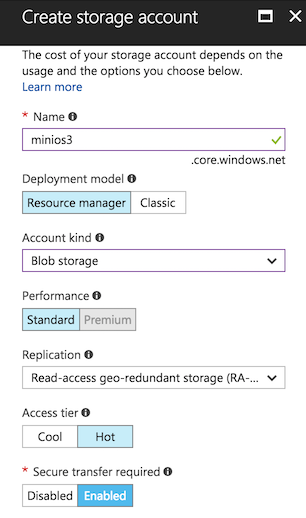

Configuring an Azure blob storage

We will add a new storage account. Its name will be used as the access key.

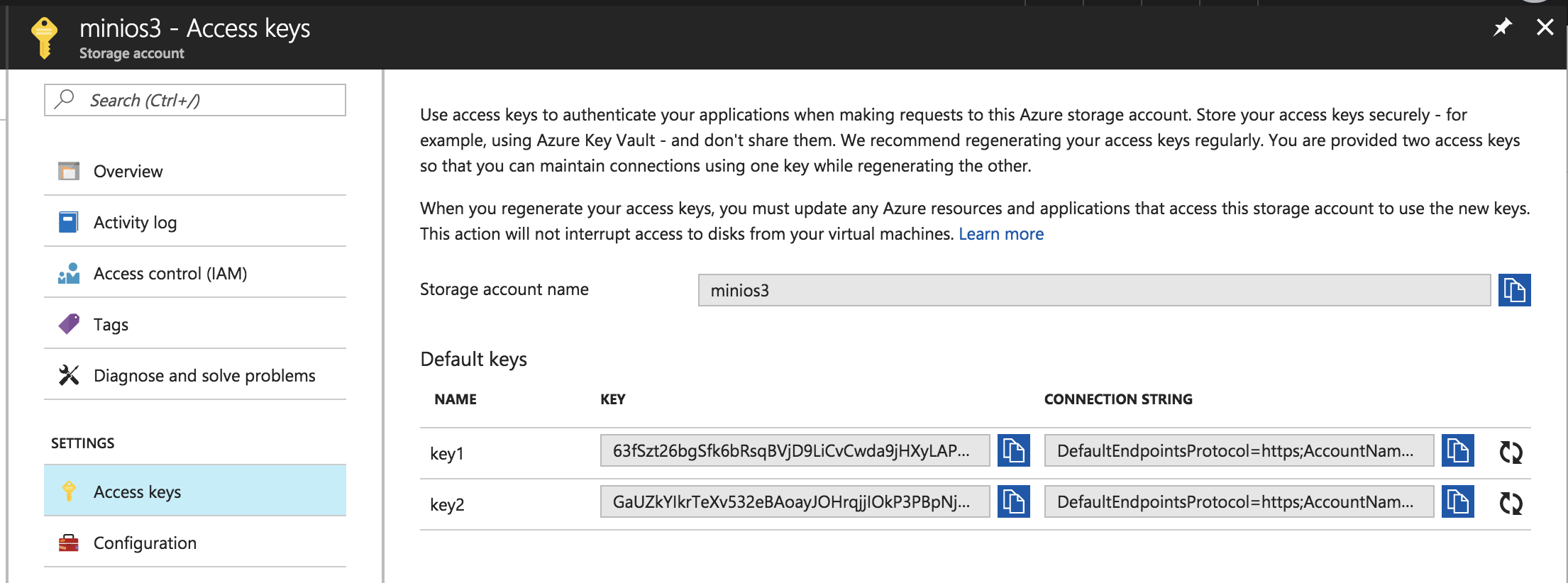

Once the storage account is created, we will need one of its access keys.

Configuring Minio with Docker

Running Minio as an S3 gateway for Azure is as simple as:

    docker run \
      -p 9000:9000 \
      --name azure-s3 \
      -e "MINIO_ACCESS_KEY=storage_account_name" \
      -e "MINIO_SECRET_KEY=storage_account_key" \
      minio/minio gateway azure
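Once the gateway is running, an existing S3 client only needs a different endpoint and credentials. The sketch below assumes the gateway started by the command above listens on http://localhost:9000 and that the third-party boto3 package is installed. `gateway_client_kwargs` and `make_gateway_client` are hypothetical helper names, and the account name and key are placeholders. Depending on your boto3 and Minio versions, extra client configuration may be needed.

```python
def gateway_client_kwargs(endpoint_url, account_name, account_key):
    """Build the keyword arguments for an S3 client that targets the Minio gateway."""
    return {
        "service_name": "s3",
        # Assumption: the gateway is reachable at this URL instead of AWS.
        "endpoint_url": endpoint_url,
        # The storage account name and key act as the S3 credentials.
        "aws_access_key_id": account_name,
        "aws_secret_access_key": account_key,
        # Assumption: same region value as bucket_location in the s3cmd config.
        "region_name": "us-east-1",
    }


def make_gateway_client(endpoint_url, account_name, account_key):
    """Create a boto3 S3 client; boto3 is imported lazily because it is third-party."""
    import boto3

    return boto3.client(**gateway_client_kwargs(endpoint_url, account_name, account_key))


kwargs = gateway_client_kwargs(
    "http://localhost:9000", "storage_account_name", "storage_account_key"
)
print(kwargs["endpoint_url"])
```

Calling `make_gateway_client(...).list_buckets()` should then list the containers of the storage account as buckets, provided the gateway is up.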

If you want to start Minio on a swarm, you can use this stack file:

    version: "3"

    services:
      minio_with_traefik:
        image: minio/minio
        command: gateway azure
        environment:
          MINIO_ACCESS_KEY: "storage_account_name"
          MINIO_SECRET_KEY: "storage_account_key"
        networks:
          - webgateway
        deploy:
          labels:
            - "traefik.port=9000"
            - "traefik.frontend.rule=Host:minio.mydomain.com"
          replicas: 3
          restart_policy:
            condition: on-failure

      minio:
        image: minio/minio
        command: gateway azure
        environment:
          MINIO_ACCESS_KEY: "xxx"
          MINIO_SECRET_KEY: "xxx"
        ports:
          - 9001:9000
        deploy:
          replicas: 3
          restart_policy:
            condition: on-failure

    networks:
      webgateway:
        driver: overlay
        external: true

The stack file contains two variants. minio_with_traefik uses custom labels for Traefik, which makes it easy to get HTTPS and to listen on a standard web port, while minio simply publishes port 9001. With the Traefik variant, Minio can be used for serving assets or other document types to your applications.

s3cmd tools with Minio

You can access your new S3 gateway with the AWS CLI or with other compatible tools like s3cmd, a popular CLI tool.

s3cmd is compatible with Minio starting from v2.0.1.

Configuring s3cmd

To configure s3cmd, you have to create a configuration file. By default, s3cmd uses ~/.s3cfg, but you can specify another configuration file at runtime with s3cmd -c my_configuration.conf

    [default]
    access_key = storage_account_name
    secret_key = storage_account_access_key
    bucket_location = us-east-1
    cloudfront_host = minio.mydomain.com:9000
    host_base = minio.mydomain.com:9000
    host_bucket = minio.mydomain.com:9000
    multipart_chunk_size_mb = 100
    use_https = False
    check_ssl_certificate = True

The important configuration keys are:

- access_key: The storage account name

- secret_key: One of the two storage account access keys

- multipart_chunk_size_mb: By default 15 MB, which is really low; choose something between 15 and 200

- cloudfront_host: Minio address

- host_base: Minio address

- host_bucket: Minio address

- use_https: By default, Minio doesn't use HTTPS

If you expose Minio on the standard HTTP port (80) or HTTPS port (443), you can omit the port from the address.
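If you manage several gateways, the configuration file can also be generated. The following is a minimal sketch using Python's standard configparser module; `build_s3cfg` is a hypothetical helper, the values are the placeholders used above, and it assumes s3cmd accepts the plain `key = value` layout that configparser writes.

```python
import configparser
import io


def build_s3cfg(account_name, account_key, minio_host, chunk_mb=100, use_https=False):
    """Return the text of an s3cmd configuration pointing at a Minio gateway."""
    # interpolation=None keeps any '%' in the values literal.
    config = configparser.ConfigParser(interpolation=None)
    config["default"] = {
        "access_key": account_name,
        "secret_key": account_key,
        "bucket_location": "us-east-1",
        "cloudfront_host": minio_host,
        "host_base": minio_host,
        "host_bucket": minio_host,
        "multipart_chunk_size_mb": str(chunk_mb),
        "use_https": str(use_https),
        "check_ssl_certificate": "True",
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


s3cfg_text = build_s3cfg(
    "storage_account_name", "storage_account_access_key", "minio.mydomain.com:9000"
)
print(s3cfg_text)
```

Write the returned text to a file and pass that file to s3cmd with the -c option.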

Conclusion

We use one Minio gateway in production. It's an internal gateway for our backups, and we use s3cmd for uploading them without issues.

We are trying to set up a second gateway to expose object storage over the S3 protocol for our Rails application. We added Traefik in front of it to easily get an address on the standard HTTPS port and an SSL certificate without effort. But the lack of ACLs per object or per pattern forces us to rewrite part of our application to split the documents across two different storage accounts. During the rewrite, we will check whether we can use the storage account directly, without Minio.

If you find a typo, or have a problem when trying what is described in this article, please contact me!