Docker on Azure, how to build your own Swarm cluster

This post will be a quick review of how we built our Docker Swarm cluster on Azure and how you can do the same!

First of all, we want secret management in Swarm, and as of today (October 2017), only CoreOS Alpha has a version of Docker >= 1.13.

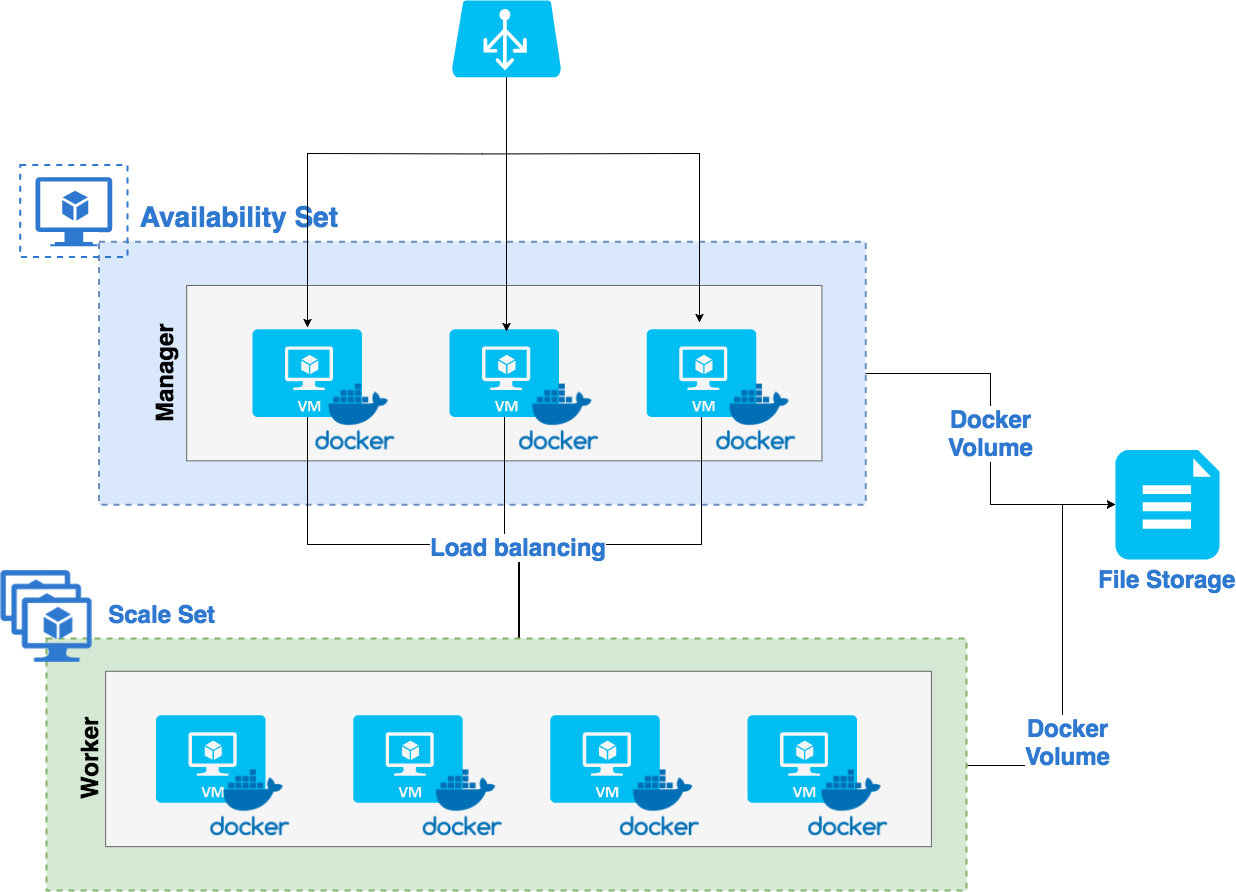

Infrastructure

We want something resilient and highly available. We will use a layer 4 load balancer to distribute traffic across our own layer 7 load balancers, which are hosted on our manager nodes.

- For the manager nodes, we won't use a Scale Set because we don't need to scale them, so we use a simple Availability Set.

- For our workers, we can scale them with a Scale Set.

- For the volumes shared between our services, we will use Azure File Storage.
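As a rough sketch, the three building blocks above map to the Azure CLI as follows. The resource group and the `swarm-managers` / `swarm-workers` names are placeholders of ours, not values from our real setup. Creating the VMs themselves is left out, and we assume you are already logged in with `az login`.

```shell
#!/bin/sh
# Sketch only: every resource name below is a hypothetical placeholder.
RESOURCE_GROUP="${RESOURCE_GROUP:-my-swarm-rg}"

# Managers: a plain Availability Set, since we do not need to scale them.
create_manager_availability_set() {
  az vm availability-set create \
    --resource-group "$RESOURCE_GROUP" \
    --name swarm-managers
}

# Workers: resize the Scale Set to the requested number of instances ($1).
scale_workers() {
  az vmss scale \
    --resource-group "$RESOURCE_GROUP" \
    --name swarm-workers \
    --new-capacity "$1"
}

# Shared volumes: a File Storage share ($2) inside a Storage Account ($1).
create_file_share() {
  az storage share create \
    --account-name "$1" \
    --name "$2"
}
```

For example, `scale_workers 5` would ask Azure to run five worker instances.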

Using shared volumes with Docker

Having a shared volume across the nodes of a Docker Swarm has never been enjoyable to set up. With Docker on Azure, I tried Cloudstor with an Azure Storage account instead of a custom (and complex) solution.

Cloudstor

Cloudstor is a modern volume plugin built by Docker. It comes pre-installed and pre-configured in Docker Swarm on Azure.

Both Swarm mode tasks and Docker containers can mount a volume created with Cloudstor for data persistence.

Cloudstor relies on shared storage infrastructure provided by Azure, specifically File Storage shares exposed over SMB.

Note: Direct-attached storage (which is used to satisfy very low latency / high IOPS requirements) is not yet supported
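To make this concrete, here is a minimal sketch of creating a Cloudstor volume and mounting it in a Swarm service. It assumes the plugin is available under the `cloudstor:azure` alias. The volume name, service name, replica count and the `/shareable` mount path are illustrative choices, not part of our setup.

```shell
#!/bin/sh
# Sketch: the names passed to these functions are up to you.

# Create a volume backed by an Azure File Storage share of the same name.
# $1 = volume (and share) name
create_shared_volume() {
  docker volume create -d "cloudstor:azure" --opt share="$1" "$1"
}

# Start a service whose tasks all mount the shared volume at /shareable.
# $1 = service name, $2 = volume name
run_service_with_volume() {
  docker service create \
    --name "$1" \
    --replicas 3 \
    --mount "type=volume,volume-driver=cloudstor:azure,source=$2,destination=/shareable" \
    alpine ping docker.com
}
```

Calling `create_shared_volume sharedvol1` and then `run_service_with_volume ping1 sharedvol1` should give every task of `ping1` the same files under `/shareable`, whichever node it lands on.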

Installing Cloudstor

If Cloudstor is not installed, you first need to find the latest version of the docker4x/cloudstor plugin.

Note: We will use the 17.05.0-ce-azure2 version in our example; feel free to replace it with the current version.

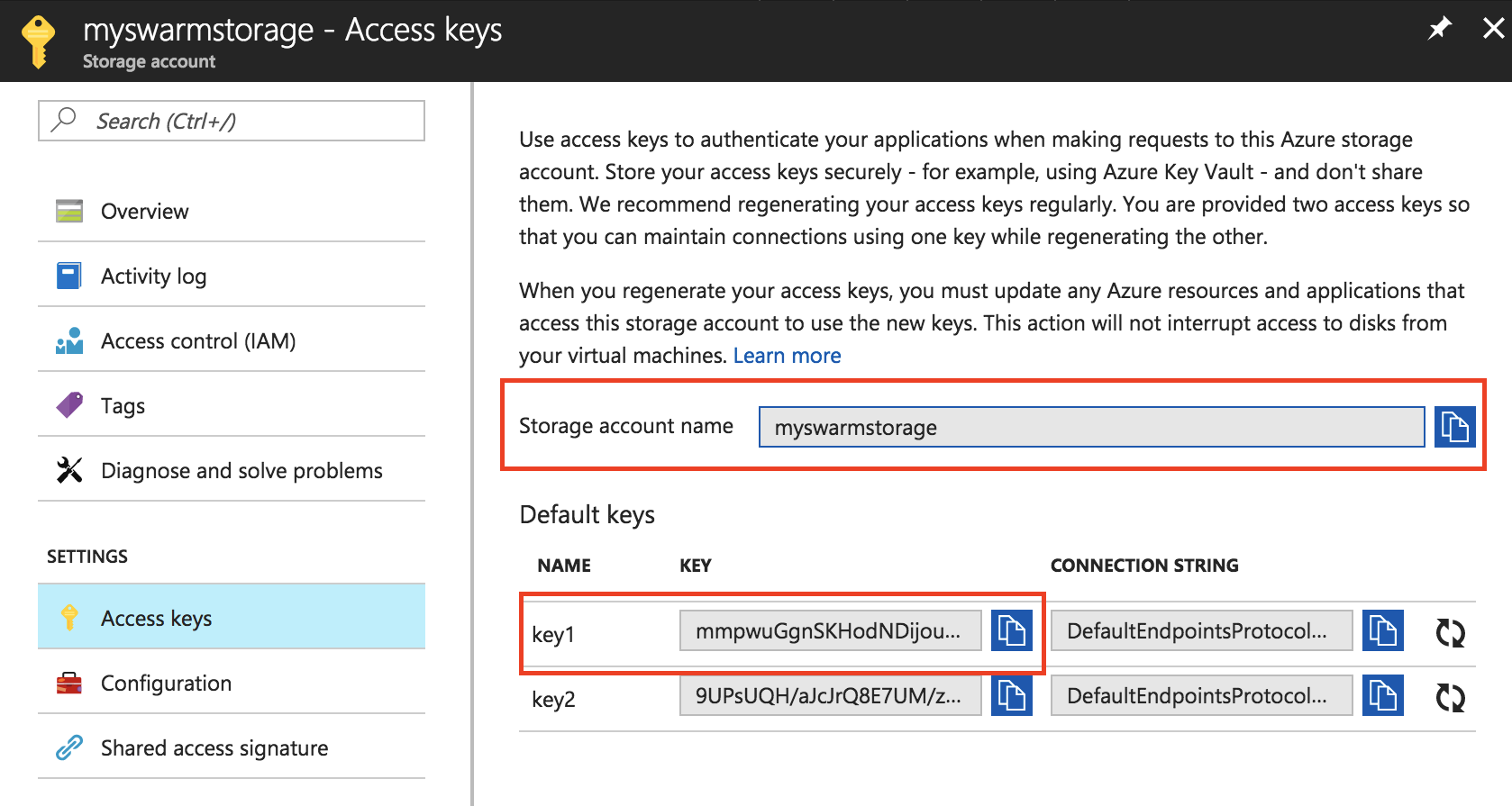

To configure the plugin, you need the name and an access key of an Azure Storage Account. You can find both on your Storage Account > Access Keys page:

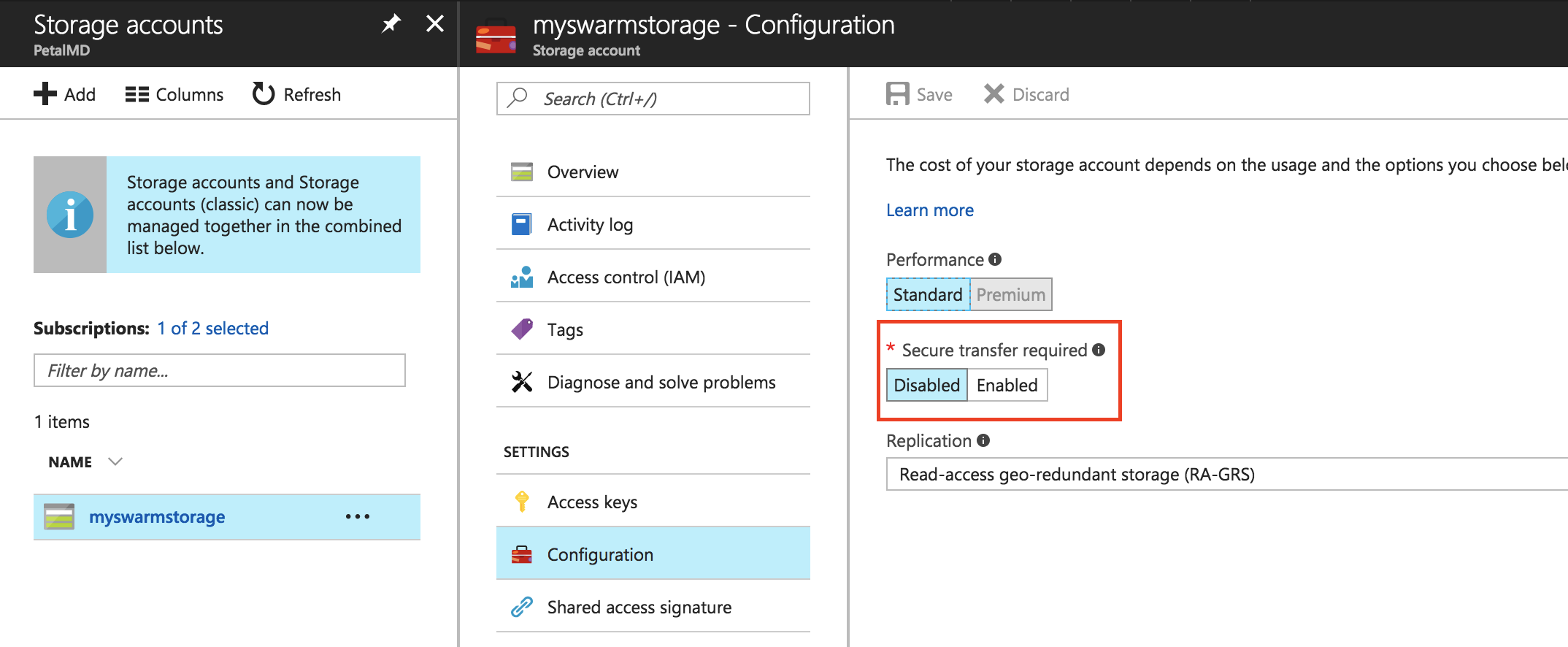

Note: If you use Docker on Linux, the Storage Account should have "Secure transfer required" disabled; Linux does not support it for now.
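If you prefer the command line to the portal, the same two steps can be sketched with the Azure CLI. This assumes a logged-in `az`; the resource group and account name are whatever you pass in.

```shell
#!/bin/sh
# Sketch: requires a logged-in Azure CLI; arguments are your own names.

# Print the first access key of a Storage Account.
# $1 = resource group, $2 = storage account name
storage_account_key() {
  az storage account keys list \
    --resource-group "$1" \
    --account-name "$2" \
    --query "[0].value" \
    --output tsv
}

# Turn off "Secure transfer required" on the Storage Account.
# $1 = resource group, $2 = storage account name
disable_secure_transfer() {
  az storage account update \
    --resource-group "$1" \
    --name "$2" \
    --https-only false
}
```

The value printed by `storage_account_key` is what goes into `AZURE_STORAGE_ACCOUNT_KEY` when installing the plugin.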

On each node of your swarm, install the plugin:

    docker plugin install docker4x/cloudstor:17.05.0-ce-azure2 \
      --alias cloudstor:azure \
      CLOUD_PLATFORM=AZURE \
      AZURE_STORAGE_ACCOUNT_KEY="mmpwuGgnSKHodND...." \
      AZURE_STORAGE_ACCOUNT="myswarmstorage"
    Plugin "docker4x/cloudstor:17.05.0-ce-azure2" is requesting the following privileges:
     - network: [host]
     - mount: [/dev]
     - allow-all-devices: [true]
    services:
     - capabilities: [CAP_SYS_ADMIN CAP_DAC_OVERRIDE CAP_DAC_READ_SEARCH]
    Do you grant the above permissions? [y/N] y
    17.05.0-ce-azure2: Pulling from docker4x/cloudstor
    1f90a29ccfcb: Verifying Checksum
    1f90a29ccfcb: Download complete
    Digest: sha256:aa2ae6026e8f5c84d3992e239ec7eec2c578090f10528a51bd8c311d5da48c7a
    Status: Downloaded newer image for docker4x/cloudstor:17.05.0-ce-azure2
    Installed plugin docker4x/cloudstor:17.05.0-ce-azure2
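Since the install has to be repeated on every node, a non-interactive variant can help. The sketch below uses `--grant-all-permissions` to skip the prompt and then checks that the plugin shows up as enabled. The function names are ours, and the tag, account name and key are arguments you supply.

```shell
#!/bin/sh
# Install Cloudstor without the interactive permission prompt.
# $1 = plugin tag, $2 = storage account name, $3 = storage account key
install_cloudstor() {
  docker plugin install --grant-all-permissions \
    --alias cloudstor:azure \
    "docker4x/cloudstor:$1" \
    CLOUD_PLATFORM=AZURE \
    AZURE_STORAGE_ACCOUNT="$2" \
    AZURE_STORAGE_ACCOUNT_KEY="$3"
}

# Succeed only if a plugin named cloudstor:azure is listed as enabled.
cloudstor_enabled() {
  docker plugin ls --format '{{.Name}} {{.Enabled}}' \
    | grep -q '^cloudstor:azure true$'
}
```

On a node, `install_cloudstor 17.05.0-ce-azure2 myswarmstorage "$KEY" && cloudstor_enabled` would install the plugin and confirm it is active.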

Configuring a load balancer

Azure

For the probe, we don't have a lot of options. We can use the ping API of Træfik or try to load the dashboard.

I will use the ping API: my-traefik.com:8080/ping should respond with a 200 HTTP code and OK as content.
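Before wiring the probe into Azure, it is worth checking the endpoint by hand. Here is a small sketch with curl; the function name and the 5 second timeout are our own choices.

```shell
#!/bin/sh
# Succeed only if GET <base-url>/ping answers 200 with the body "OK".
# $1 = base URL of a manager, e.g. http://my-traefik.com:8080
traefik_ping_ok() {
  # Append the status code on its own line after the response body.
  response=$(curl --silent --max-time 5 --write-out '\n%{http_code}' "$1/ping") || return 1
  status=$(printf '%s\n' "$response" | tail -n 1)
  body=$(printf '%s\n' "$response" | sed '$d')
  [ "$status" = "200" ] && [ "$body" = "OK" ]
}
```

For example: `traefik_ping_ok http://my-traefik.com:8080 && echo healthy`.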

For the ports, 3 simple rules:

- Port 80 to port 80

- Port 443 to port 443

- Port 8080 to port 8080
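The probe and the three rules can also be sketched with the Azure CLI. The probe name `traefik-ping` and the `tcp-<port>` rule names are placeholders. We also assume the load balancer has a single frontend IP and a single backend pool, so the CLI can pick them by default.

```shell
#!/bin/sh
# Sketch: names are hypothetical; assumes one frontend IP and one backend pool.

# HTTP probe on the Traefik ping endpoint.
# $1 = resource group, $2 = load balancer name
create_lb_probe() {
  az network lb probe create \
    --resource-group "$1" --lb-name "$2" \
    --name traefik-ping \
    --protocol Http --port 8080 --path /ping
}

# One TCP rule per port, each forwarding to the same port on the managers.
# $1 = resource group, $2 = load balancer name
create_lb_rules() {
  for port in 80 443 8080; do
    az network lb rule create \
      --resource-group "$1" --lb-name "$2" \
      --name "tcp-$port" \
      --protocol Tcp \
      --frontend-port "$port" --backend-port "$port" \
      --probe-name traefik-ping || return 1
  done
}
```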

Træfik

Træfik (pronounced like traffic) is a modern HTTP reverse proxy and load balancer. It's designed to deploy microservices with ease.

- Supports several backends/engines (Docker, Kubernetes, Amazon ECS, and more)

- No dependencies, single binary made in Golang

- Tiny official Docker image

- Hot-reloading of configuration, no need to restart the process

- WebSocket, HTTP/2, gRPC ready

- Let’s Encrypt support (Automatic HTTPS with renewal)

- High Availability with cluster mode (beta)

- And more...

We could run Træfik on any node and expose ports 80 and 443; the routing mesh would redirect traffic to the right container. However, going through the routing mesh loses the client IP.

We also want Traefik to listen to Docker events and update its configuration when a new service is created or when a container changes. You can either proxy the managers' Docker sockets or run Traefik on the managers themselves. We prefer running Traefik on our managers to keep the solution simple (KISS).

Deploying Traefik

Note: An updated version of how to deploy traefik was posted here: Using Traefik as your load-balancer in Docker Swarm with Let's Encrypt.

A simple stack file will be:

    version: "3.2"

    services:
      traefik:
        image: traefik
        command:
          - --web
          - --docker
          - --docker.swarmmode
          - --docker.domain=petalmd.com
          - --docker.watch
        volumes:
          # Traefik reads Swarm events from the manager's Docker socket
          - /var/run/docker.sock:/var/run/docker.sock
        networks:
          # only webgateway for now; the traefik network is added with Consul
          - webgateway
        ports:
          # host mode bypasses the routing mesh, so the client IP is kept
          - target: 80
            published: 80
            mode: host
          - 8080:8080
        deploy:
          mode: global
          placement:
            constraints:
              - node.role == manager
          restart_policy:
            condition: on-failure

    networks:
      webgateway:
        driver: overlay
        external: true
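Because `webgateway` is declared as external, it has to exist before the stack is deployed. Here is a minimal sketch of the deployment, to be run on a manager; the function name, stack file name and stack name are our own.

```shell
#!/bin/sh
# Create the external overlay network if it is missing, then deploy the stack.
# $1 = stack file, $2 = stack name
deploy_traefik_stack() {
  docker network inspect webgateway >/dev/null 2>&1 \
    || docker network create --driver overlay webgateway \
    || return 1
  docker stack deploy --compose-file "$1" "$2"
}
```

For example: `deploy_traefik_stack traefik.yml traefik`.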

We can check the dashboard page on http://my-docker-swarm:8080/ and see if some of our services get registered.

But what if we want HTTPS and automatic certificate generation?

Add Let's Encrypt and HTTPS

Following the documentation, we update our stack file:

    services:
      traefik:
        command:
          - [...]
          - --entrypoints="Name:http Address::80 Redirect:https"
          - --entrypoints="Name:https Address::443 TLS"
          - --defaultEntrypoints=https
          - --acme
          - --acme.storage=/etc/traefik/acme/acme.json
          - --acme.entryPoint="https"
          - --acme.onHostRule=true
          - --acme.onDemand=false
          - --acme.email=me@example.com
        ports:
          - [...]
          - target: 443
            published: 443
            mode: host
        volumes:
          - [...]
          - traefik-acme:/etc/traefik/acme

    volumes:
      traefik-acme:
        driver: cloudstor:azure
        driver_opts:
          share: traefik-acme
          # quoted so YAML does not read it as an octal number
          filemode: "0600"

This solution works, but it has a concurrency problem: each node generates its own SSL certificate through ACME, so you can easily exceed your Let's Encrypt hourly limit.

A better solution is to have Traefik running in HA/Cluster mode.

Configuring a Traefik cluster

Simple: we just need to keep the configuration in a KV store!

We will use Consul as our KV store.

Run Consul

Update the stack file to add Consul and a network shared by Consul and Traefik:

    services:
      traefik:
        networks:
          - [...]
          - traefik
      consul:
        image: progrium/consul
        command: -server -bootstrap -ui-dir /ui
        networks:
          - traefik

    networks:
      traefik:
        driver: overlay

Start Traefik

    services:
      traefik:
        image: traefik
        command:
          - [...]
          - --consul
          - --consul.endpoint=consul:8500
          - --consul.prefix=traefik
        networks:
          - webgateway
          - traefik
      consul:
        image: progrium/consul
        command: -server -bootstrap -ui-dir /ui
        networks:
          - traefik

    networks:
      webgateway:
        driver: overlay
        external: true
      traefik:
        driver: overlay

Final Traefik stack file

    version: "3.2"

    services:
      traefik:
        image: traefik
        # folded scalar: the lines below are joined into one command string
        command: >-
          --web
          --docker
          --docker.swarmmode
          --docker.domain=petalmd.com
          --docker.watch
          --entrypoints="Name:http Address::80 Redirect:https"
          --entrypoints="Name:https Address::443 TLS"
          --acme
          --acme.storage=traefik/acme/account
          --acme.entryPoint="https"
          --acme.OnHostRule=true
          --acme.onDemand=false
          --defaultentrypoints=https
          --acme.email=jmaitrehenry@petalmd.com
          --consul
          --consul.endpoint=consul:8500
          --consul.prefix=traefik
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock
        networks:
          - webgateway
          - traefik
        ports:
          - target: 80
            published: 80
            mode: host
          - target: 443
            published: 443
            mode: host
          - 8080:8080
        deploy:
          mode: global
          placement:
            constraints:
              - node.role == manager
          restart_policy:
            condition: on-failure
      consul:
        image: progrium/consul
        command: -server -bootstrap -ui-dir /ui
        networks:
          - traefik

    networks:
      webgateway:
        driver: overlay
        external: true
      traefik:
        driver: overlay
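Once the stack is deployed, a quick sanity check is to confirm that the global Traefik service really has one running task on every manager. This is a sketch; the function names are ours, and the service name depends on the stack name you chose (for a stack named `traefik` it would be `traefik_traefik`).

```shell
#!/bin/sh
# Nodes that currently run a task of the given service.
# $1 = service name
traefik_task_nodes() {
  docker service ps "$1" \
    --filter desired-state=running \
    --format '{{.Node}}' | sort -u
}

# Hostnames of all manager nodes in the swarm.
manager_nodes() {
  docker node ls --filter role=manager --format '{{.Hostname}}' | sort -u
}

# Succeed when the set of task nodes equals the set of managers.
traefik_on_all_managers() {
  [ "$(traefik_task_nodes "$1")" = "$(manager_nodes)" ]
}
```

For example: `traefik_on_all_managers traefik_traefik || echo "a manager is missing its Traefik task"`.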

Start a service and register it to Traefik

    version: "3"

    services:
      kibana:
        image: kibana:4.1
        environment:
          ELASTICSEARCH_URL: "http://x.x.x.x:9200"
        networks:
          - webgateway
        deploy:
          labels:
            - "traefik.port=5601"
            - "traefik.frontend.rule=Host:kibana.petalmd.com"
            - "traefik.frontend.auth.basic=user:$$apr1$$fsfds/X$$..."
          replicas: 1
          restart_policy:
            condition: on-failure

    networks:
      webgateway:
        driver: overlay
        external: true
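The doubled `$$` in the basic auth label is there because a stack file treats a single `$` as the start of a variable. Here is a small sketch for producing such a label. It assumes `openssl` is installed for the apr1 (htpasswd-style) hash, and the function names are ours.

```shell
#!/bin/sh
# Double every "$" so the stack file does not treat it as a variable.
# $1 = string to escape
escape_for_compose() {
  printf '%s\n' "$1" | sed 's/\$/$$/g'
}

# Print a ready-to-paste label for user $1 with password $2.
basic_auth_label() {
  hash=$(openssl passwd -apr1 "$2") || return 1
  escape_for_compose "traefik.frontend.auth.basic=$1:$hash"
}
```

Running `basic_auth_label user 'my-password'` prints a line you can paste under `deploy.labels`.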

Thanks to Dorian Amouroux for the correction.